Linear Visual Autoregressive Modeling via Cached Token Pruning

ICCV 2025


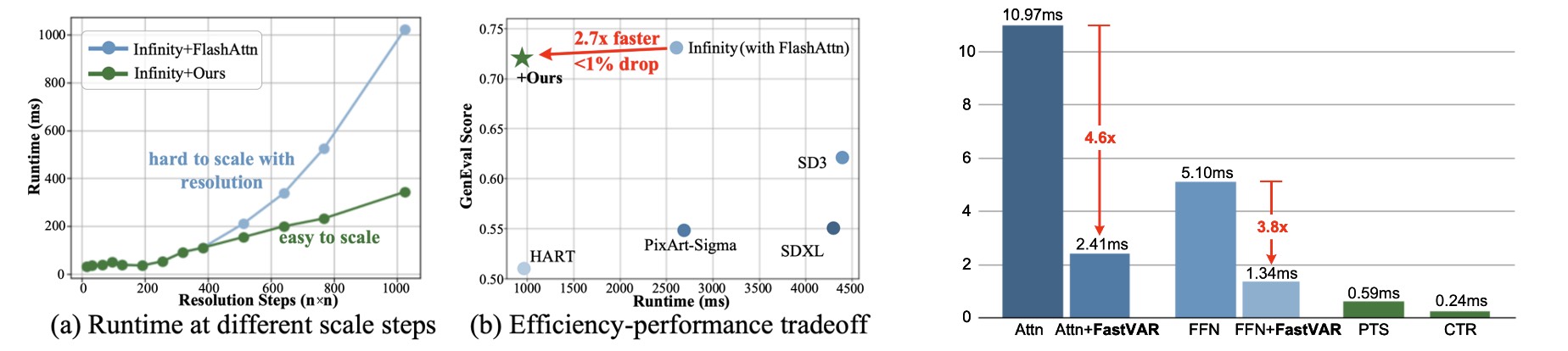

Visual Autoregressive (VAR) modeling has gained popularity for its shift towards next-scale prediction. However, existing VAR paradigms process the entire token map at every scale step, so complexity and runtime grow dramatically with image resolution.
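To make the resolution-scaling problem concrete, the sketch below counts tokens per scale step and a crude attention-cost proxy. It assumes the 10-step scale schedule `(1, 2, 3, 4, 5, 6, 8, 10, 13, 16)` used by the original VAR 256×256 model, and assumes that tokens at step k attend to every token generated at steps up to and including k, so the score-matrix size at that step is roughly n_k · Σ_{j≤k} n_j. The names `PATCH_NUMS` and `attention_cost_profile` are hypothetical, and the proxy ignores MLP and projection layers (which scale linearly in tokens); it is an illustration, not the profiling reported in the paper.

```python
# Hypothetical sketch: attention-cost proxy per scale step of a VAR-style model.
# Assumption: the 10-step schedule of the original VAR 256x256 model.
PATCH_NUMS = (1, 2, 3, 4, 5, 6, 8, 10, 13, 16)


def attention_cost_profile(patch_nums):
    """Return per-step token counts and an attention-cost proxy.

    At step k the model forwards n_k = s_k**2 tokens, which attend to all
    tokens produced so far (including the current step), so the number of
    attention scores is roughly n_k * sum_{j<=k} n_j.
    """
    tokens = [s * s for s in patch_nums]
    costs, seen = [], 0
    for n in tokens:
        seen += n                # context length visible at this step
        costs.append(n * seen)   # size of the attention score matrix
    return tokens, costs


tokens, costs = attention_cost_profile(PATCH_NUMS)
total = sum(costs)
last_share = costs[-1] / total                    # share of the final (largest) step
last_two_share = (costs[-1] + costs[-2]) / total  # share of the two largest steps

# Doubling every side length (a hypothetical higher-resolution schedule)
# quadruples every n_k and every context length, so the proxy grows 16x.
_, costs_2x = attention_cost_profile(tuple(2 * s for s in PATCH_NUMS))
growth = sum(costs_2x) / total

print(f"tokens={sum(tokens)} last={last_share:.3f} last_two={last_two_share:.3f} growth={growth:.1f}x")
```

Under these assumptions the final step alone accounts for about 61% of the proxy cost and the last two steps for about 86%, while doubling the resolution multiplies the attention cost by 16.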

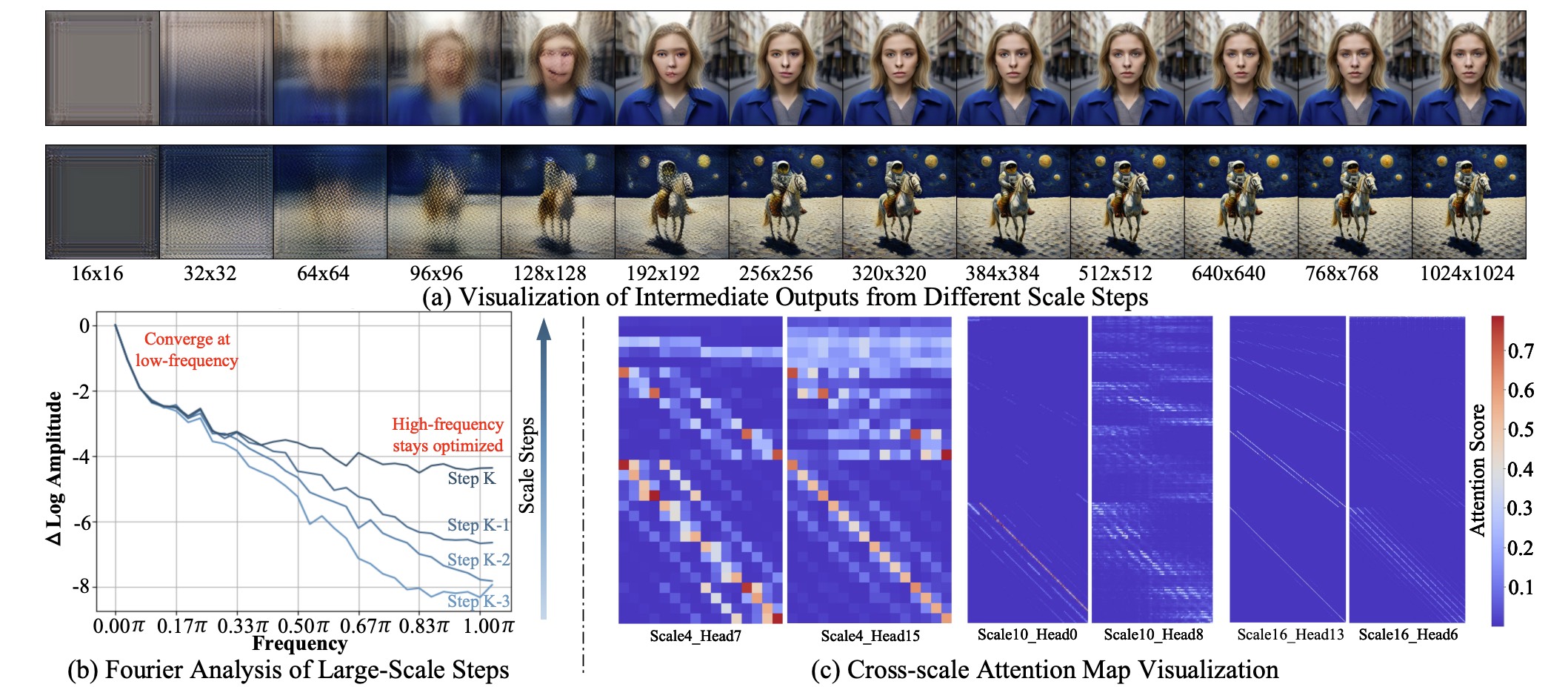

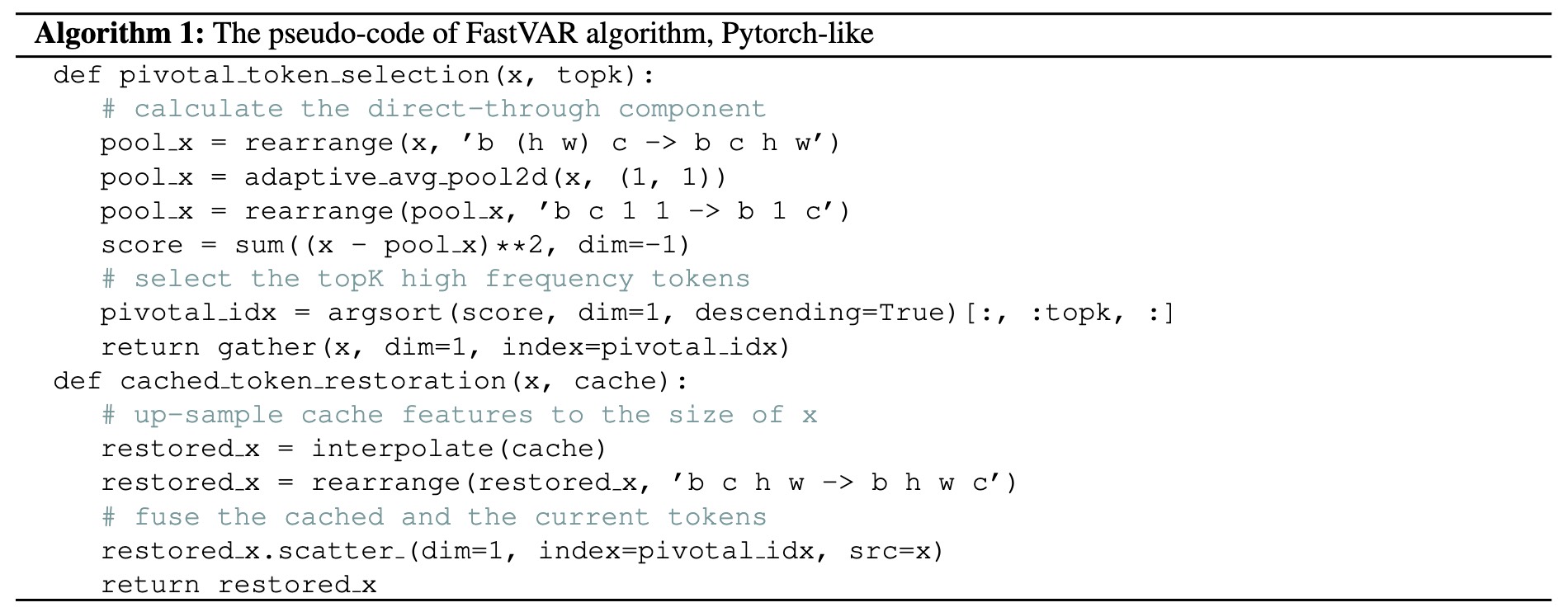

To address this challenge, we propose FastVAR, a post-training acceleration method for efficient resolution scaling with VARs. Our key finding is that most of the latency arises from the large-scale steps, where most tokens have already converged. Leveraging this observation, we develop a cached token pruning strategy that forwards only pivotal tokens for scale-specific modeling, while using cached tokens from previous scale steps to restore the pruned slots. This significantly reduces the number of forwarded tokens and improves efficiency at larger resolutions.
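The idea of forwarding only pivotal tokens and refilling the rest from a cache can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not FastVAR's implementation: the pivotal-token score (distance of each token from the mean token, used here as a stand-in for "not yet converged" content), the nearest-neighbor upsampling of the previous step's output as the cache, and the helper names `upsample_nearest` and `cached_token_pruning` are all hypothetical. `block_fn` stands in for the transformer blocks of a VAR backbone.

```python
import numpy as np


def upsample_nearest(prev, H, W):
    """Nearest-neighbor upsample a (h, w, C) token map to (H, W, C)."""
    h, w = prev.shape[:2]
    rows = (np.arange(H) * h) // H
    cols = (np.arange(W) * w) // W
    return prev[rows[:, None], cols[None, :]]


def cached_token_pruning(tokens, cache, block_fn, keep_ratio=0.5):
    """Forward only the top-scoring tokens; fill pruned slots from the cache.

    tokens:   (N, C) flattened token map at the current (large) scale.
    cache:    (N, C) previous-step output, upsampled to the current scale.
    block_fn: callable mapping (K, C) -> (K, C); stands in for the backbone.
    """
    n = tokens.shape[0]
    k = max(1, int(round(n * keep_ratio)))
    # Hypothetical pivotal score: deviation from the mean token.
    scores = np.linalg.norm(tokens - tokens.mean(axis=0, keepdims=True), axis=1)
    keep_idx = np.argsort(-scores)[:k]       # indices of the k pivotal tokens
    out = cache.copy()                       # pruned slots reuse cached values
    out[keep_idx] = block_fn(tokens[keep_idx])  # only k tokens are forwarded
    return out, keep_idx


# Usage: prune a 16x16 step to 25% of its tokens, reusing a 13x13 step's output.
rng = np.random.default_rng(0)
prev = rng.normal(size=(13, 13, 8))
cur = rng.normal(size=(16, 16, 8)).reshape(-1, 8)
cache = upsample_nearest(prev, 16, 16).reshape(-1, 8)
out, kept = cached_token_pruning(cur, cache, block_fn=lambda x: 2.0 * x, keep_ratio=0.25)
```

Because only `k` of the `N` tokens pass through `block_fn`, the per-step cost of the backbone shrinks roughly in proportion to `keep_ratio`, while the output still has one entry per position at the full resolution.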

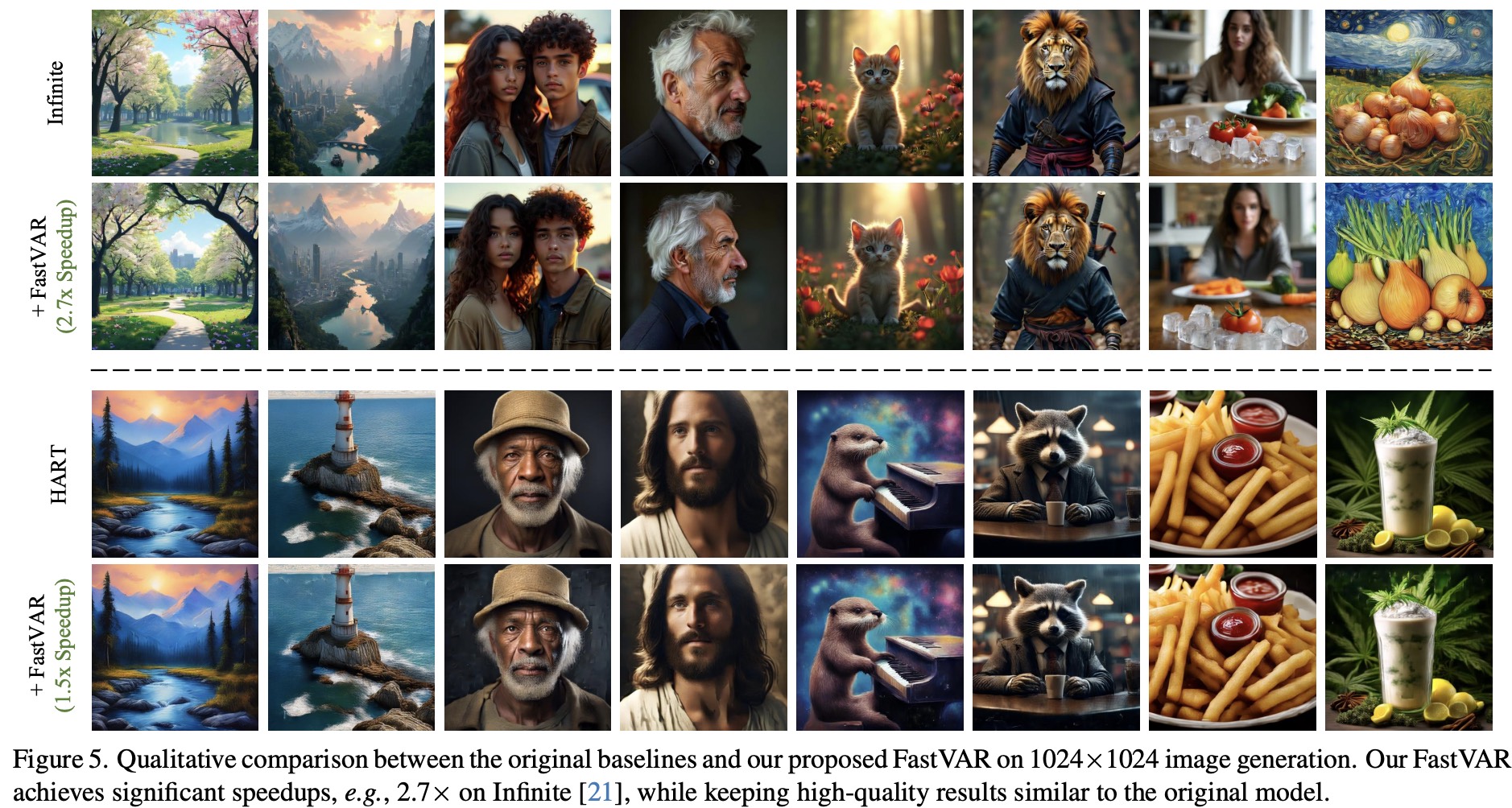

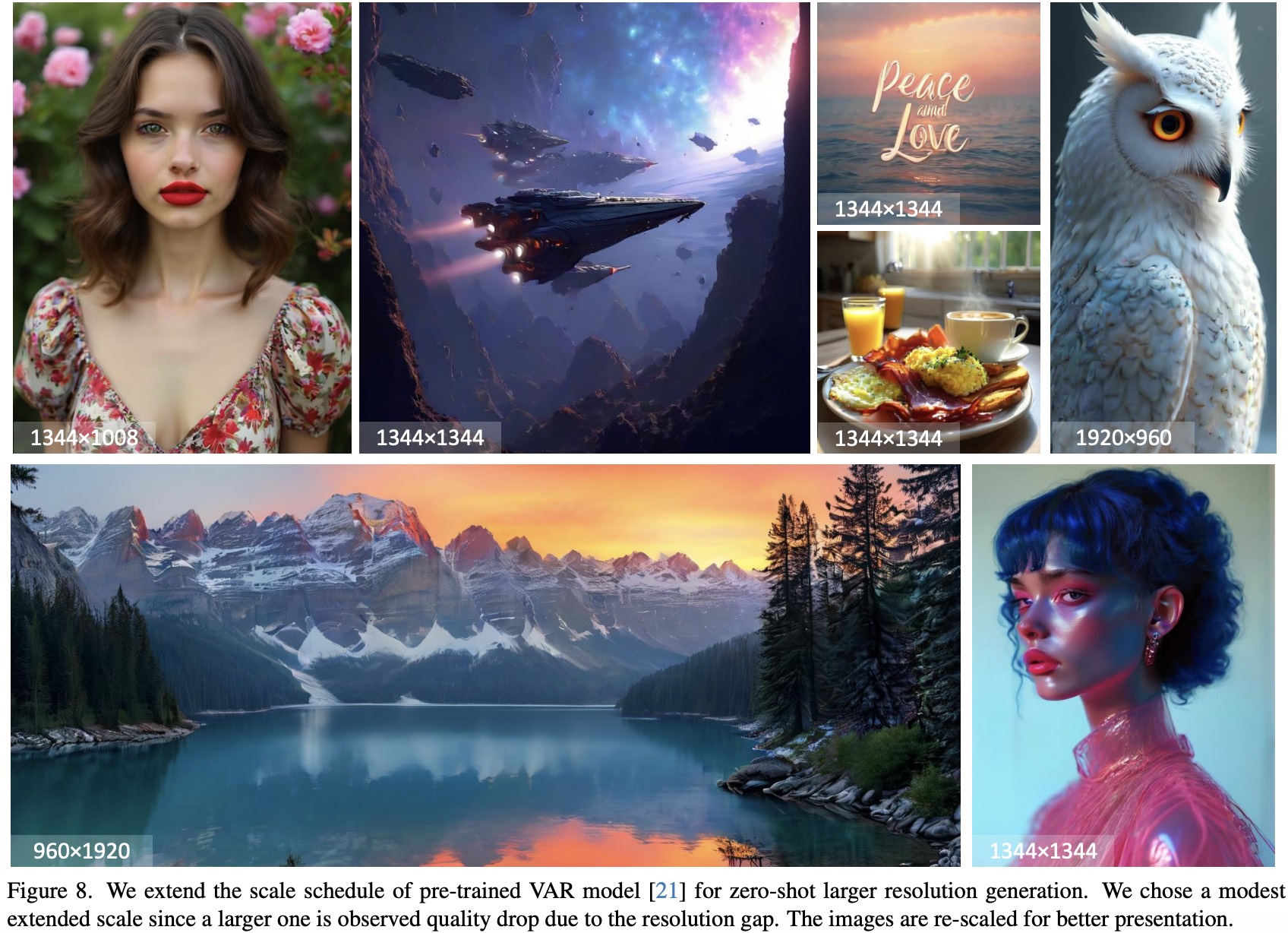

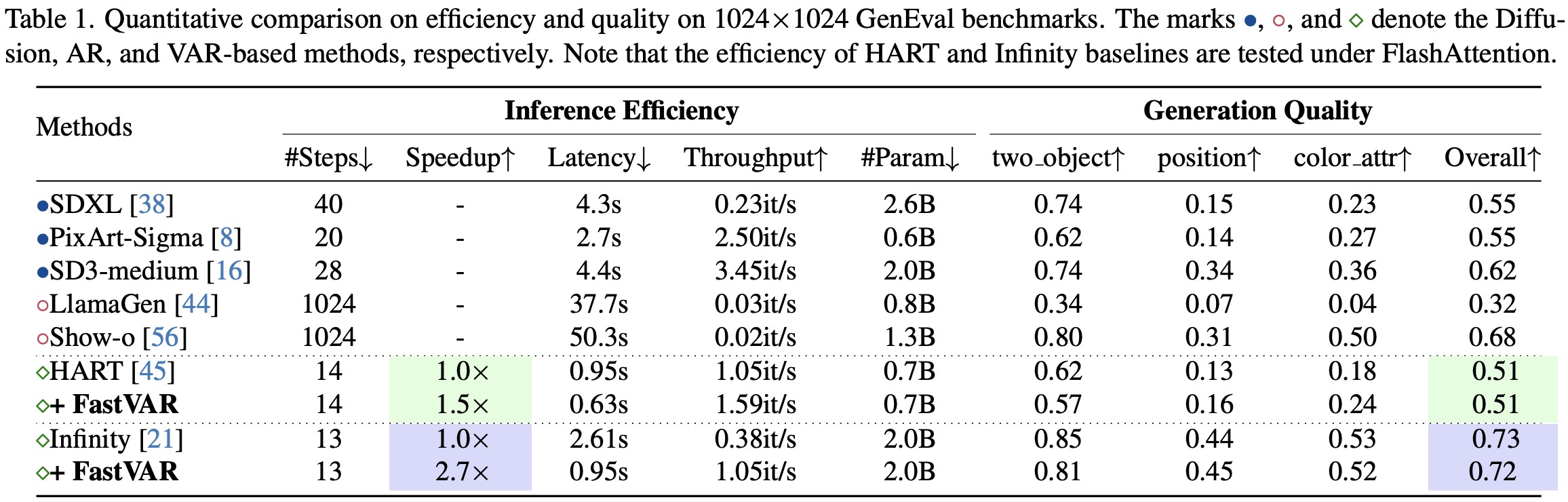

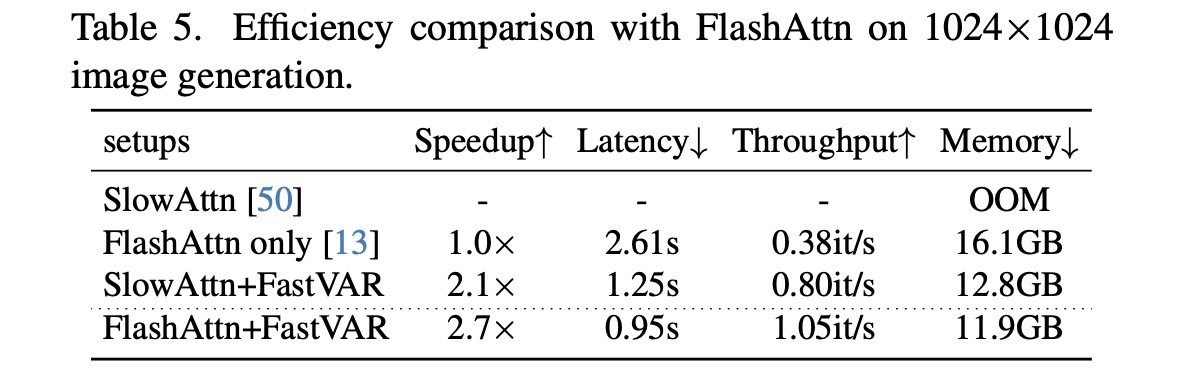

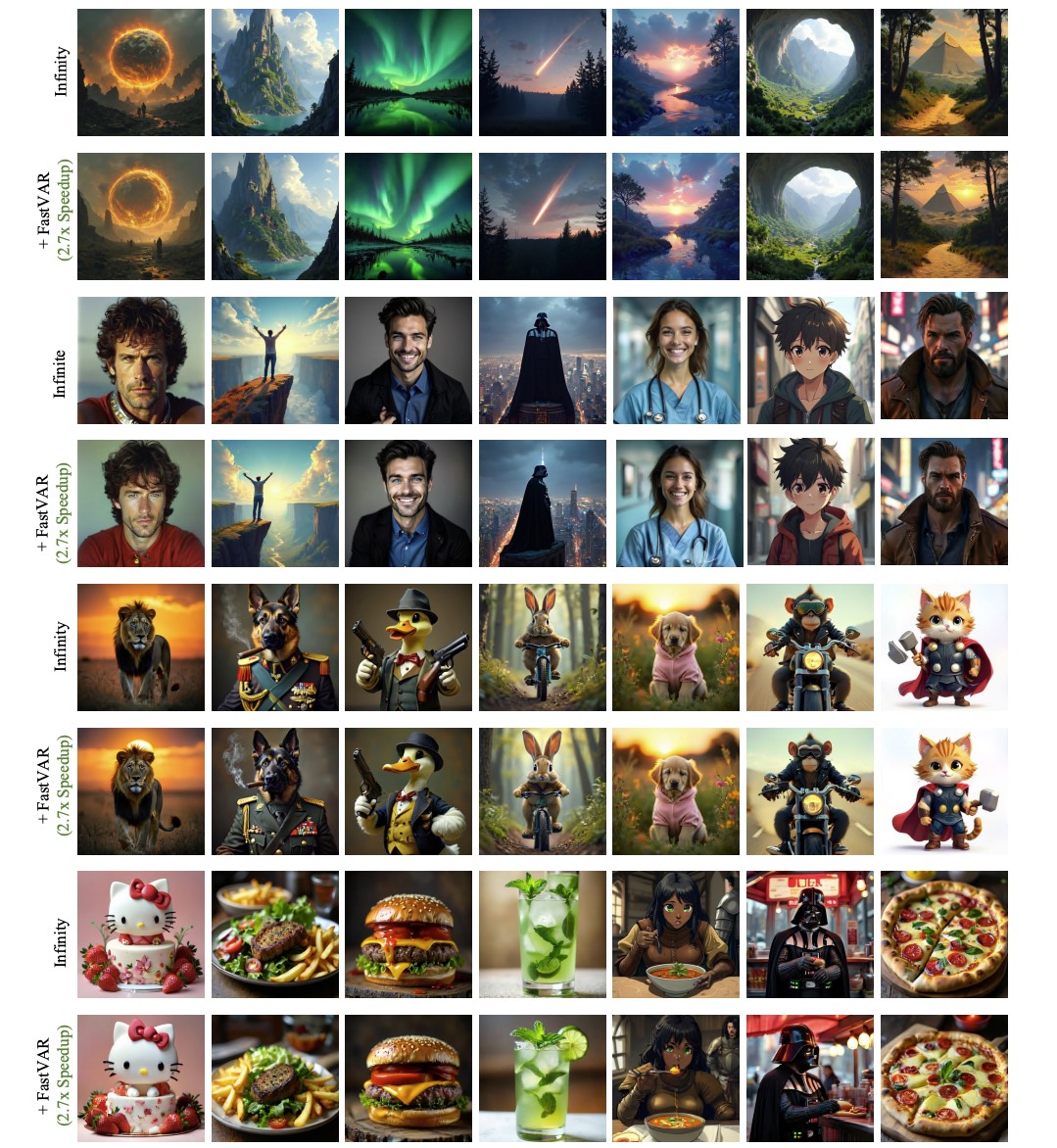

Experiments show that FastVAR can further speed up FlashAttention-accelerated VAR by 2.7x with a negligible performance drop of less than 1%. We further extend FastVAR to zero-shot generation of higher-resolution images. In particular, FastVAR can generate a 2K image with a 15 GB memory footprint in 1.5 s on a single NVIDIA 3090 GPU.

FastVAR introduces "cached token pruning", which is training-free and generic across various VAR backbones.

FastVAR builds on three findings about pre-trained VAR models:

More illustrations can be seen in our paper.

FastVAR achieves a 2.7x speedup with less than 1% performance drop, even on top of FlashAttention-accelerated setups. Detailed results can be found in the paper.

FastVAR remains robust even under extreme pruning ratios.

Without any training, our FastVAR can find meaningful pruning strategies.

@article{guo2025fastvar,
  title={FastVAR: Linear Visual Autoregressive Modeling via Cached Token Pruning},
  author={Guo, Hang and Li, Yawei and Zhang, Taolin and Wang, Jiangshan and Dai, Tao and Xia, Shu-Tao and Benini, Luca},
  journal={arXiv preprint arXiv:2503.23367},
  year={2025}
}